In the world of logistics, the "last mile problem" refers to the final leg of a product's journey to its destination. Despite being the shortest part, it's often the most costly and complex due to factors like traffic, multiple drop-off points, and the need for personalized service.

Today, a similar "last mile problem" has emerged in the field of generative AI.

The Challenges

At Sanka, we define the last mile problem in generative AI as a technically complex and often unsolvable challenge that arises when large language models (LLMs) interact with other apps to complete real-world tasks.

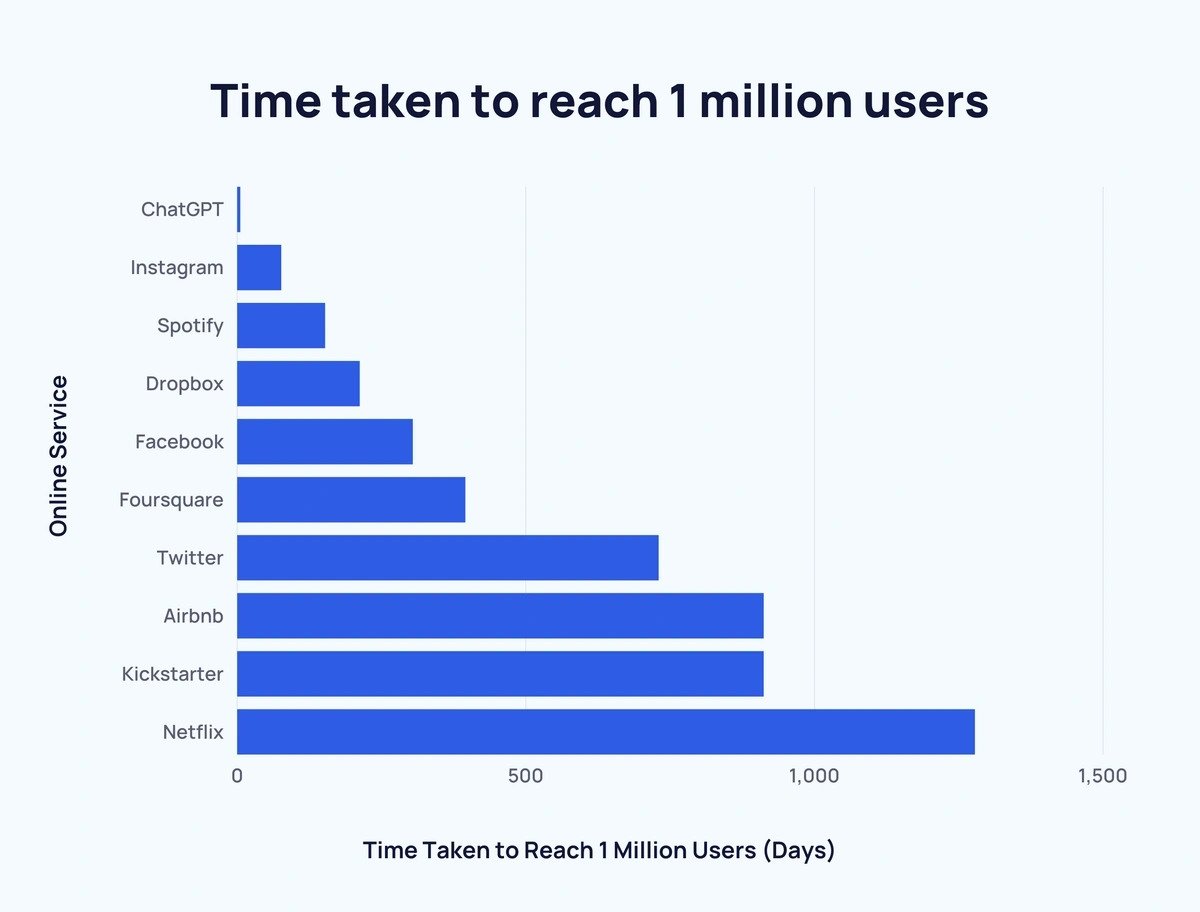

Generative AI, led by models such as OpenAI's GPT-3 and GPT-4 and the ChatGPT application built on them, has made significant strides in the tech world and beyond. ChatGPT set a record by reaching 100 million active users just two months after launch.

Despite these impressive advancements, AI systems face a challenge reminiscent of the last mile problem in logistics: integrating seamlessly with real-world applications to deliver the final product (completed tasks) to end users.

While LLM tools can generate human-like text, they have no direct way to act in the real world. If you ask ChatGPT to book a hotel or buy a gift on your behalf, it cannot do so directly.

More precisely, these models lack interfaces to websites, APIs, and physical systems. Users have to manually copy and paste generated text into applications, a clunky workaround that severely limits their practical utility.

To streamline this process, LLM apps recently introduced "plugins," which are essentially apps that run on their platforms. OpenAI's ChatGPT, Google's Bard, and Microsoft's Bing all have plugins.

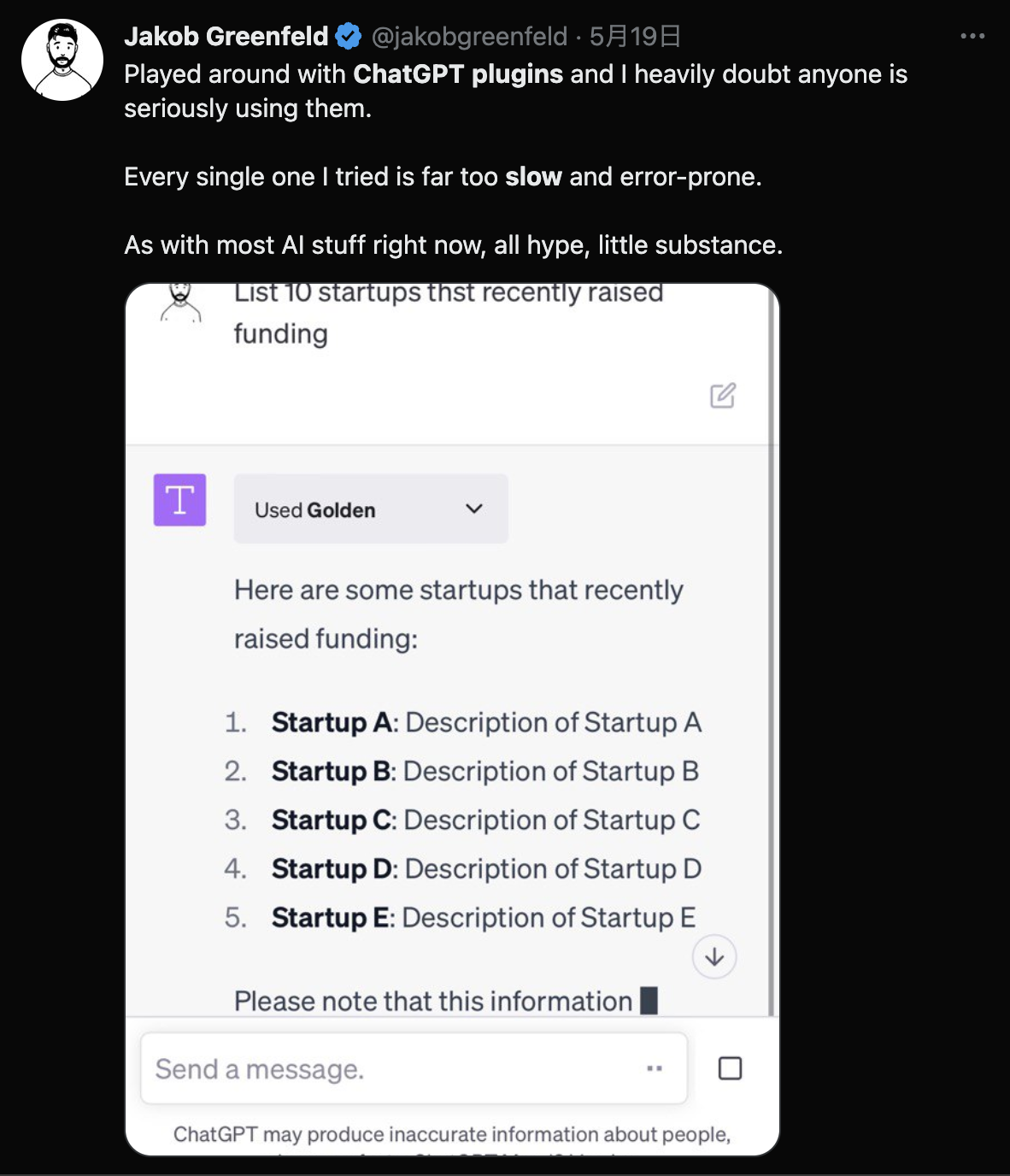

However, the user experience, particularly with third-party integrations, is far from perfect and often not even useful.

To be fair, these plugins are labeled "beta," so the experience will likely improve in the coming weeks. Still, much work remains before the underlying technical challenges are fully addressed.

In most cases, developers build plugins for LLM apps by providing the following:

- A manifest file (JSON) that describes the plugin's metadata and when and how the model should trigger its actions

- API documentation (for ChatGPT plugins, an OpenAPI specification) that explains the features and the endpoints the model can call to execute commands
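To make those two ingredients concrete, here is a minimal Python sketch of a manifest in the style of OpenAI's beta ChatGPT plugin format (`ai-plugin.json`). The field names follow that published format as we understand it. The plugin name, URLs, and endpoint names are made up, and the `REQUIRED_KEYS` set and `validate_manifest` helper are hypothetical additions for illustration only. It is a sketch, not a reference implementation.

```python
import json

# Hypothetical manifest in the style of OpenAI's beta ChatGPT plugin
# format (ai-plugin.json). Every value here is made up for illustration.
manifest = {
    "schema_version": "v1",
    "name_for_human": "Example Gift Shop",
    "name_for_model": "example_gift_shop",
    "description_for_human": "Search for and order gifts.",
    # The model reads this text to decide when (and how) to call the plugin.
    "description_for_model": (
        "Use this plugin when the user wants to find or buy a gift. "
        "Call searchGifts before createOrder."
    ),
    "auth": {"type": "none"},
    # Points the model at the API document (an OpenAPI spec) that lists
    # the endpoints it may call.
    "api": {"type": "openapi", "url": "https://example.com/openapi.yaml"},
    "logo_url": "https://example.com/logo.png",
    "contact_email": "support@example.com",
    "legal_info_url": "https://example.com/legal",
}

# Hypothetical sanity check: the keys we expect every manifest to carry.
REQUIRED_KEYS = {
    "schema_version", "name_for_human", "name_for_model",
    "description_for_human", "description_for_model",
    "auth", "api", "logo_url", "contact_email", "legal_info_url",
}


def validate_manifest(m: dict) -> None:
    """Raise ValueError if an expected key is missing or the API type is wrong."""
    missing = REQUIRED_KEYS - set(m)
    if missing:
        raise ValueError(f"manifest is missing keys: {sorted(missing)}")
    if m["api"].get("type") != "openapi":
        raise ValueError("api.type must be 'openapi'")


validate_manifest(manifest)
# Serialize to the JSON file a plugin host would fetch.
manifest_json = json.dumps(manifest, indent=2)
print(manifest_json)
```

The model learns everything about the plugin from `description_for_model` and the OpenAPI document behind `api.url`. The manifest offers no hook for front-end rendering or for coordinating with other plugins.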

This approach, which we call "shallow integration," is quite limited. It gives plugins no control over the front end, which is an essential part of a great UX, and no support for integrating with other plugins.

Also, without deeper integration, the LLM has to absorb large amounts of input from the plugin documents, so the response to even a simple prompt can take more than 10 seconds.

The result, as you might expect, is a slow chatbot with limited execution capabilities.

a tweet by Jakob Greenfeld

The Opportunity

This post is not about being pessimistic or nitpicking the flaws of a generation-defining technology. It's the opposite.

We're INSANELY excited about its future and want to lay out some of the opportunities ahead.

The potential of AI tools like ChatGPT, Bard, and Anthropic's Claude is immense, as Bill Gates has highlighted. In a recent op-ed, Gates compared the revolution brought by generative AI to that of the graphical user interface in 1980, predicting massive changes over the coming decades in sectors such as work, education, health care, and communication.

The commercial impact of AI tools is also evident. ChatGPT alone is projected to make $200 million in 2023 and $1 billion by 2024. People are finding value in these tools for tasks ranging from drafting emails to writing essays, and even generating content for news sites. The market for LLM plugins could be even larger (imagine an App Store for chat apps).

But as illustrated above, this transformative power hits a stumbling block when LLM apps try to embed themselves into the applications that end users rely on every day.

This is why we believe solving the last mile problem is the biggest opportunity in the age of AI.

Just as Amazon strove to solve the last mile delivery problem, we believe the companies that thrive over the next decade will be those that solve the last mile problem in generative AI, fully unlocking its potential and becoming the next trillion-dollar companies.

And the race has already begun.

At this week's Microsoft Build, the software titan announced Windows Copilot, which will integrate generative AI seamlessly with our everyday applications, making it an integral part of our workflows rather than an isolated chat tool.

We’ll see more of these fundamental integrations in the coming weeks and months.

Sanka’s Role

The opportunities aren’t just for big companies.

SaaS and other tech companies can build plugins fairly quickly on top of their existing tech stacks. Creators, SMBs, and students will likely build plugins too. Given the distribution of LLM apps (already 100 million+ users), these investments could pay off well.

At Sanka, as a vertical iPaaS and automation platform, we are taking a slightly different approach, because solving the last mile problem will require progress on multiple fronts. We are:

- integrating apps with Sanka as widely and deeply as possible so we can execute real-world tasks efficiently ("deep integration" as opposed to the "shallow integration" of LLM apps). Sanka already integrates with ad platforms, social media, CRMs, and email, to name a few, and we'll add more in the coming weeks.

- building microservices to execute automations for our customers. These automation services will become "plugins" that LLM apps can execute on their platforms.

- optimizing our own AI models (inputs: reasoning <> outputs: acting) to make our services more capable, reliable, and ultimately useful.

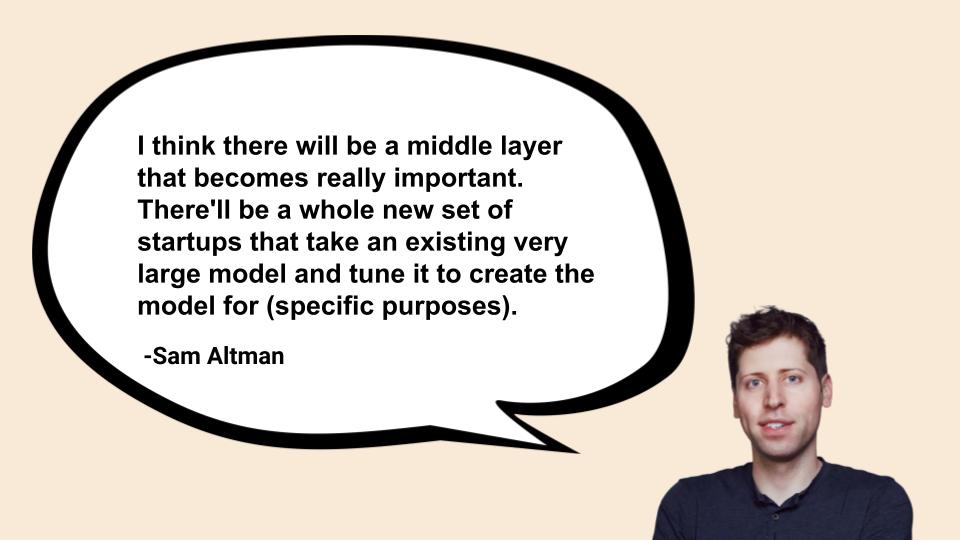

Sam Altman, co-founder and CEO of OpenAI, has emphasized the importance of a middle layer between LLMs and end users. We agree with his view.

The last mile in GenAI - a Pandora's box or a golden goose?

With great potential comes great responsibility.

LLMs that can deeply connect with our daily applications and systems could run harmful programs or steal critical data. As we continue to develop and implement generative AI, we must also put in place the necessary guardrails and regulations to ensure that any downsides are far outweighed by its benefits.

It's important for industry leaders, AI scientists, policymakers, and the public to discuss these issues thoroughly and prioritize what matters. AI's potential is so large that it could turn out to be the last innovation humanity needs, or it could cause catastrophic damage to our lives.

At Sanka, we believe AI-powered apps, especially ones that solve the last mile problem, will bring huge benefits to humanity. While minding both the upsides and the downsides, we will keep working on the last mile problem and delivering value to our customers.

As we take strides in this direction, we will not only be unlocking the full potential of generative AI but also paving the way for the next wave of innovation, which may include AGI. It's a thrilling future that's just within our reach.